Go deeper: Improve industrial wastewater assets beyond the big-ticket items with advanced analytics applications

In process manufacturing environments, facility operations like wastewater treatment and neutralization are often taken for granted, but these systems must always be ready to ensure upstream operational uptime. Because these sorts of auxiliary utilities are essential for profitable manufacturing, a conservative stance is frequently taken when evaluating new technologies.

Changes and upgrades typically affect methodologies, chemistry and setpoints, and anticipating the required adjustments is a challenge when using antiquated analytical tools like spreadsheets.

When subject matter experts (SMEs) attempt to quantify the impacts of these changes or study other issues, there is often limited time for analysis, along with barriers to bringing all relevant data together. Advanced analytics applications help bridge the gaps among multiple sources, connecting data streams like wastewater treatment with other manufacturing information.

Using data to back decisions made in manufacturing environments is becoming increasingly standard, and as industrial wastewater operations look to modernize, embracing this trend is imperative.

Challenges to distributed data analytics

When performing data analysis in industrial settings, it is normal to focus on the big-ticket items as a source for possible improvement. In wastewater treatment, this includes assets like neutralization tanks, settling basins, digesters and sanitizers. These assets are typically seen as “valuable” enough to justify the time and effort to combine the required data into a spreadsheet, verify validity, align, cleanse and finally perform the analysis of interest to make a data-based decision.

Meanwhile, numerous other assets and processes do not receive the same scrutiny and are frequently left in set-it-and-forget-it mode, as long as they do not introduce known problems.

Between the sometimes-competing challenges of meeting industrial process regulatory requirements and adapting to upstream changes in chemistry or process conditions, performing the analyses required to continually improve smaller operations can get lost in the background. In the best cases, occasional improvements may be made, but these are usually based only on tribal knowledge or hunches unsubstantiated by analysis.

As industrial wastewater teams look to modernize operations, the ability to develop and implement analytics at scale across a facility — not just on the largest or most valuable assets — is becoming the norm. Additionally, cross-team collaboration is more important than ever, with the advent of enhanced insight-sharing and reporting tools, which make it easier to ensure optimal operations and transparency among multiple operating and engineering groups. Greater collaboration capabilities can be the difference between meeting or falling short of aggressive companywide initiatives, such as energy reduction or improved sustainability.

Breaking the barriers to go deeper with advanced analytics

Cloud-hosted advanced analytics applications address these and other decision-making challenges. These software tools first establish a live connection to all existing data sources throughout a facility, not limited to manufacturing operations. This effectively breaks down the silos containing data necessary for performing analytics across the entire industrial treatment process, including upstream and incoming processing changes.

Using these applications, SMEs can quickly build calculations with simple point-and-click tools for cleansing, contextualizing and process modeling to perform diagnostic, predictive and descriptive analytics on operational and equipment data. With these tools, the engineering hours required for analysis can be dramatically reduced, eliminating much of the time lost to manual data wrangling and sorting through spreadsheets. This frees up skilled staff for other tasks, such as process optimization and improvement. It can also extend analyses beyond the largest, highest-value assets at a site, delivering the combined rewards of many distributed improvements plantwide.

Additionally, these applications can ingest or create an asset hierarchy within the data that reflects the equipment at the facility. Analytics performed on a single asset can then be replicated at scale across an entire fleet, enabling easy monitoring of numerous assets that might have been overlooked in the past because the perceived return did not justify the time invested. These results can then be captured and communicated within the broader organization. This alleviates the siloed, time-intensive and error-prone analyses that occur when organizations depend on spreadsheet applications for data analysis.
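The idea of writing an analysis once and replicating it across an asset hierarchy can be illustrated with a small, generic Python sketch. This is not how any particular analytics product implements it; the tree layout, asset names, pH limits and sample values are all hypothetical and chosen only for illustration.

```python
from statistics import mean

# Hypothetical asset tree: area -> asset -> signal name -> samples.
# Names and values are illustrative, not taken from a real facility.
ASSET_TREE = {
    "Neutralization": {
        "Tank 1": {"pH": [6.8, 7.1, 7.4]},
        "Tank 2": {"pH": [5.9, 6.9, 8.3]},
    },
    "Clarification": {
        "Basin 1": {"pH": [7.0, 7.0, 7.2]},
    },
}


def ph_excursion_fraction(signals, low=6.5, high=7.5):
    """Single-asset analysis: share of pH samples outside [low, high].

    The limits are assumed defaults, not regulatory values.
    """
    ph = signals["pH"]
    return mean(1.0 if (v < low or v > high) else 0.0 for v in ph)


def apply_to_all(tree, analysis):
    """Run the same single-asset analysis on every asset in the tree."""
    return {
        f"{area}/{asset}": analysis(signals)
        for area, assets in tree.items()
        for asset, signals in assets.items()
    }


results = apply_to_all(ASSET_TREE, ph_excursion_fraction)

# Rank assets so the ones with the most excursions surface first.
worst_first = sorted(results, key=results.get, reverse=True)
```

Because the analysis function only sees one asset's signals, adding a new tank or basin to the tree brings it into the ranking without any change to the calculation itself.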

Optimizing a chemical dosing application

The process of neutralizing, flocculating, softening or otherwise restoring process water to the quality required for disposal or reuse in the facility typically requires large amounts of treatment chemicals. The exact volume of chemical required for treatment, whether for pH neutralization, biological load sanitization or another process, was historically difficult to determine continuously. As a result, many industrial facilities end up overtreating their wastewater, using more chemicals and energy than necessary.

By implementing Seeq, an advanced analytics application, a dairy-based food and beverage manufacturer was able to dial in precise chemical dosing calculations, using the time freed up by reduced manual data wrangling. By leveraging a live connection with process data, the facility treatment engineer analyzed historical data, comparing bio-load in the water’s waste-activated sludge with the amount of chemicals used for treatment.

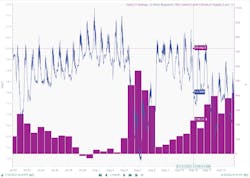

These chemical quantities tended to be based on a single worst-case scenario, rather than actual contamination conditions. By creating a conversion table of the chemicals required to treat each given bio-load, the engineer quantified the amount of chemical that could have been saved by using a dynamic control strategy rather than worst-case dosing. Coincidentally, the old setpoint did not always treat wastewater adequately (Figure 1).

Over the course of this investigation, the engineer found that wastewater was, on average, dramatically overtreated. By adjusting the control strategy to vary the dose based on measured process conditions, the facility reduced chemical costs, saving hundreds of thousands of dollars each year.
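The comparison between fixed worst-case dosing and table-based dosing can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the engineer's actual calculation: the conversion table, its units, the fixed setpoint and the bio-load history are all hypothetical, and the lookup simply picks the smallest table band that covers each measured load.

```python
from bisect import bisect_left

# Hypothetical conversion table: (bio-load upper bound, required dose).
# Values and units are illustrative only, not from the case study.
CONVERSION_TABLE = [
    (100.0, 2.0),
    (200.0, 4.0),
    (300.0, 6.0),
    (400.0, 8.0),
]


def required_dose(bio_load):
    """Return the dose for the smallest table band that covers bio_load."""
    bounds = [upper for upper, _ in CONVERSION_TABLE]
    i = bisect_left(bounds, bio_load)
    # Loads above the table are clamped to the top band (an assumption;
    # a real table would need to cover the full operating range).
    i = min(i, len(CONVERSION_TABLE) - 1)
    return CONVERSION_TABLE[i][1]


def compare_dosing(bio_loads, fixed_dose):
    """Compare a fixed setpoint with table-based dosing over a history."""
    dynamic_total = 0.0
    undertreated = 0
    for load in bio_loads:
        need = required_dose(load)
        dynamic_total += need
        if fixed_dose < need:
            undertreated += 1  # the fixed setpoint fell short here
    fixed_total = fixed_dose * len(bio_loads)
    return {
        "fixed_total": fixed_total,
        "dynamic_total": dynamic_total,
        "saved": fixed_total - dynamic_total,
        "undertreated_samples": undertreated,
    }


# Hypothetical bio-load history, one reading per dosing interval.
history = [80.0, 150.0, 120.0, 350.0, 90.0]
result = compare_dosing(history, fixed_dose=6.0)
```

With these made-up numbers, the fixed setpoint uses more chemical overall yet still falls short on the one high-load reading, which mirrors the two findings described above: average overtreatment alongside occasional undertreatment.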

Exploring and quantifying automatic control improvements

In addition to developing control strategies, making improvements within process logic and the control loops themselves can uncover significant hidden savings. Control systems in wastewater treatment processes are typically designed and implemented during facility buildout, and they are rarely revisited during upgrades because of the data-intensive nature of the analyses needed to characterize each control loop.

At a large pharmaceutical manufacturer’s wastewater treatment plant, engineers sought to investigate the efficacy of several control loops throughout the facility. With the existing disparate databases and spreadsheet methods, this effort would have required significant time to complete, even with a limited scope. Using Seeq, the engineering team quickly connected, cleansed and contextualized data from their various control loops. Then, they quantified metrics for these loops, such as time spent within a percentage of setpoint, travel from setpoint, time to reach setpoint and more (Figure 2).
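The loop metrics named above can be defined in several ways, and the article does not say how the team defined them. The following Python sketch shows one plausible set of definitions, assuming a constant setpoint, each sample held until the next one (zero-order hold), and "travel from setpoint" interpreted as the time-weighted mean absolute deviation. The function name, the 5% band and the sample data are hypothetical.

```python
def loop_metrics(times, pv, setpoint, band_pct=5.0):
    """Summarize one control loop from sampled data.

    times:    sample times in seconds, ascending
    pv:       process variable readings, same length as times
    setpoint: constant target value (an assumption of this sketch)
    band_pct: tolerance band as a percentage of the setpoint
    """
    if len(times) < 2 or len(times) != len(pv):
        raise ValueError("need at least two samples of equal length")

    band = abs(setpoint) * band_pct / 100.0
    in_band_time = 0.0
    abs_error_time = 0.0

    # Each reading is treated as holding until the next sample.
    for i in range(len(times) - 1):
        dt = times[i + 1] - times[i]
        err = abs(pv[i] - setpoint)
        abs_error_time += err * dt
        if err <= band:
            in_band_time += dt

    total = times[-1] - times[0]

    # First sample time at which the reading is inside the band.
    first_in_band = next(
        (t for t, v in zip(times, pv) if abs(v - setpoint) <= band),
        None,
    )

    return {
        "pct_time_in_band": 100.0 * in_band_time / total,
        "mean_abs_deviation": abs_error_time / total,
        "time_to_reach": (
            None if first_in_band is None else first_in_band - times[0]
        ),
    }


# Hypothetical pH loop settling toward a setpoint of 7.0.
metrics = loop_metrics(
    times=[0, 10, 20, 30, 40],
    pv=[5.0, 6.0, 6.9, 7.1, 7.0],
    setpoint=7.0,
)
```

Computing the same few numbers for every loop gives a common basis for comparing loops and for checking whether a tuning change actually shortened the time to reach setpoint.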

Leveraging Seeq’s ability to create ad-hoc asset structures, the group combined lab and manufacturing data into a single asset structure focused only on control loops of interest. Next, they scaled out the analysis from one loop to several others within the facility. As a result, the engineering group identified opportunities for improvement by flagging the least efficient loops and determining whether to tune each loop or replace equipment. This resulted in an average 50% reduction in time to reach setpoints throughout multiple treatment processes facility-wide.

Conclusion

Remaining competitive in today’s fast-paced manufacturing environment requires operational agility and plant optimization at every point of a process. Left to the tools of yesterday, process manufacturers find it difficult to properly analyze, operate and manage industrial assets and processes, but modern software tools make these tasks far more manageable.

Advanced analytics applications connect data among previously disparate sources, quickly generate insights and foster information sharing among multiple teams within an industrial organization, helping empower process optimization and smooth collaboration among facility staff. By leaning on these tools, SMEs can spend less time worrying about data wrangling and contextualization, and more time examining insights with team members to improve plantwide performance, sustainability and profitability.

Sean Tropsa began his career as an engineer in the specialty manufacturing and semiconductor manufacturing sectors. There, he learned how to analyze large data sets, with an emphasis on root cause analysis and continuous process improvement to solve a variety of problems. Leveraging this background in his role as senior analytics engineer at Seeq, Sean helps companies improve their analytics, enabling engineers to attain actionable insight from their data. He holds BS and MS degrees in Chemical Engineering from Arizona State University.