Managing the water footprint of data

Our modern world is defined by data, both its consumption and its generation. The exponential growth of data in recent years is undisputed yet hard to comprehend. Every day, there are 500 million tweets, 294 billion emails, 65 billion WhatsApp messages, 5 billion internet searches, and 95 million Instagram photos and videos.[1] The accumulated digital universe of data will grow from 4.4 zettabytes in 2019 to 44 zettabytes by 2020. (A zettabyte, or 10^21 bytes, is approximately equal to a trillion gigabytes; a gigabyte is 10^9 bytes.)

Entertainment data has long been a significant driver of this, but Internet of Things devices, artificial intelligence, and productivity data are now major contributors. According to a report from IBM Marketing Cloud, “10 Key Marketing Trends For 2017,” 90 percent of the data created by humanity was produced in the last few years.[2] The size of the digital universe is expected to double every two years, with a 50-fold growth from 2010 to 2020.

The majority of data are stored in data centers, with less and less being stored long term at user endpoints (e.g., our smartphones). We can get a sense for this by looking at our photo albums, where only a portion of the photos remain stored on our phone and the rest are backed up to the cloud.

The cloud is another word for the collection of data centers located across geographies interconnected with fiber optic cable. The shift to cloud-based data and services must be matched by an increase in the number of data centers, as well as an increase in the size of data centers — enter hyperscale, the very large, highly connected campuses that are becoming the backbone of the modern digital age.

The term hyperscale refers to a computer architecture’s ability to scale in order to respond to increasing demand. Hyperscale is not just the ability to scale but the ability to scale big and quickly.

Synergy Research estimates that there are 504 hyperscale data centers around the world, with another 151 in planning that have been publicly announced.[3] According to data gathered by Synergy, it took two years to build more than 100 hyperscale data centers, but the rate is predicted to accelerate substantially over the coming years due to increased use of cloud resources. Moreover, Cisco predicts hyperscale data centers will represent nearly 70 percent of the total data center processing power by 2021.[4]

Data-Water Nexus

Data storage and processing generate heat that must be removed from the hardware to prevent it from failing. Our personal computers have small fans that pull air across a heat exchanger to remove the heat. A hyperscale data center generates so much heat that large cooling infrastructure must be constructed. In general, this includes computer room air conditioners (CRACs) that blow cool air into the data halls, heat exchangers that cool air with chilled water, and cooling towers or media that use evaporative cooling to remove the exchanged heat from the water. The evaporative cooling system must be constantly fed fresh water to make up for the evaporative losses. It also continuously discharges concentrated blowdown as wastewater. This water demand and wastewater generation, both of which can be millions of gallons per day, make up the water footprint of data and explain why water and wastewater treatment is a critical factor in siting and operating large data centers.

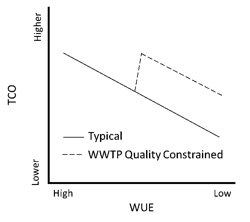

Figure 1: TCO - WUE Relationship

The Green Grid (www.thegreengrid.org) has developed several metrics, including water usage effectiveness (WUE), to measure a data center’s efficiency. The WUE divides the annual site water usage by the annual IT equipment energy, so its units are liters per kilowatt-hour (L/kWh). Where no water is used for cooling, the WUE has a value of 0. The national average WUE is 1.8 L/kWh. Using the average WUE, a 100-megawatt data center campus would have a water demand of about 1.1 million gallons per day. With smaller data centers, access to water from the municipal potable supply and blowdown discharge to the municipal sewer do not pose a problem. However, as data centers scale up, municipal infrastructure may not be able to accommodate the water usage of the data center operations; thus, the decision analysis around water supply and wastewater discharge increases in complexity.
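The 1.1 million gallons per day figure can be checked with a short calculation. The sketch below is illustrative only: it assumes the 100 megawatts is a constant IT load running all year, and the function and constant names are made up for this example.

```python
# Convert a WUE value into an average daily water demand.
# Assumption: the IT load is constant for the full year (8,760 hours).

LITERS_PER_US_GALLON = 3.785411784
HOURS_PER_YEAR = 8760  # non-leap year
DAYS_PER_YEAR = 365


def daily_water_demand_gallons(it_load_mw: float, wue_l_per_kwh: float) -> float:
    """Return the average daily water demand in US gallons."""
    annual_it_energy_kwh = it_load_mw * 1000 * HOURS_PER_YEAR
    annual_water_liters = annual_it_energy_kwh * wue_l_per_kwh
    return annual_water_liters / DAYS_PER_YEAR / LITERS_PER_US_GALLON


if __name__ == "__main__":
    demand = daily_water_demand_gallons(it_load_mw=100, wue_l_per_kwh=1.8)
    print(f"{demand / 1e6:.2f} million gallons per day")  # prints 1.14
```

Because demand scales linearly with both IT load and WUE, halving the WUE at the same load halves the daily water demand.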

Hyperscaling Water and Wastewater

Data center siting is often driven by the price of land and power and by access to the fiber network. Water supply and wastewater discharge are not often evaluated thoroughly in site selection and may not even be considered until after a site is purchased. This approach poses a risk to hyperscale data center companies, because water supply and wastewater may have a significant effect on cost of operations and facility reliability.

Wastewater Discharge

One of the most important metrics for data center operations is the total cost of ownership (TCO), which includes the cost of the facility plus the costs of operation. Reducing the operational costs improves the TCO. While power, labor, equipment, and maintenance dominate the operational costs with smaller facilities, water’s clout increases with hyperscale-level demands. Decisions related to source supply and treatment, as well as wastewater discharge, can have a significant impact on TCO. While discharging blowdown wastewater to a local utility may offload compliance risk, a thorough review of the utility wastewater treatment plant is recommended to mitigate unforeseen financial risk.

For example, in the southwestern United States, many receiving waters are impaired and have discharge limits associated with metals and total dissolved solids. The data center operator may realize a TCO benefit by increasing the cycles of concentration (CoCs) on the cooling towers and thus reducing water demand. This approach also reduces the wastewater discharge flow rate but increases the concentration of constituents in the wastewater.

↑ CoCs = ↓ WUE = ↓ Wastewater Discharge = ↑ Discharge Concentration

If the concentration of a critical parameter, such as arsenic, rises above the pretreatment discharge limit, then the data center would incur additional treatment costs, causing a jump in operating costs and causing the TCO-WUE relationship to diverge (see Fig. 1).
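The trade-off described above follows from a standard cooling tower mass balance. The sketch below is a simplified illustration, not the authors' model: it neglects drift losses and assumes the constituent stays dissolved, so its blowdown concentration is the make-up concentration multiplied by the CoC. The evaporation rate, arsenic concentration, and discharge limit are hypothetical values chosen only to show the effect.

```python
# Simplified cooling tower water balance (drift losses neglected).
# All input values in the example are hypothetical.


def cooling_tower_balance(evaporation_gpd: float, cycles: float,
                          makeup_conc_ug_l: float) -> tuple[float, float, float]:
    """Return (make-up flow, blowdown flow, blowdown concentration)."""
    if cycles <= 1:
        raise ValueError("cycles of concentration must be greater than 1")
    blowdown_gpd = evaporation_gpd / (cycles - 1)
    makeup_gpd = evaporation_gpd + blowdown_gpd
    # A conserved constituent is concentrated by a factor equal to the CoC.
    blowdown_conc_ug_l = makeup_conc_ug_l * cycles
    return makeup_gpd, blowdown_gpd, blowdown_conc_ug_l


if __name__ == "__main__":
    EVAPORATION_GPD = 1_000_000   # hypothetical evaporative loss
    MAKEUP_ARSENIC_UG_L = 5.0     # hypothetical make-up water arsenic
    DISCHARGE_LIMIT_UG_L = 25.0   # hypothetical pretreatment limit

    for cycles in (3, 6):
        makeup, blowdown, conc = cooling_tower_balance(
            EVAPORATION_GPD, cycles, MAKEUP_ARSENIC_UG_L)
        status = "exceeds limit" if conc > DISCHARGE_LIMIT_UG_L else "within limit"
        print(f"CoC {cycles}: make-up {makeup:,.0f} gpd, "
              f"blowdown {blowdown:,.0f} gpd, arsenic {conc:.0f} ug/L ({status})")
```

With these illustrative inputs, moving from 3 to 6 cycles cuts make-up demand from 1.5 to 1.2 million gallons per day and blowdown from 500,000 to 200,000 gallons per day, but raises blowdown arsenic from 15 to 30 µg/L, past the assumed limit.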

Reliability

A core commitment that data center operators make to their customers is guaranteed availability. The industry standard for data center availability is 99.999 percent, which equates to interrupted service of only about 5.26 minutes per year. The reliability of water supply and supply quality are critical for meeting this commitment to customers. According to a report by Vertiv, 11 percent of data center outages in 2016 were associated with water, heat, or CRAC failure.[5] In order to mitigate the value at risk associated with loss of supply, hyperscale data center operators should consider the following:

• While utilities can consistently provide high quality supply, the reliability requirements of the data center industry may not align with water treatment industry standards. A thorough condition assessment and vulnerability evaluation of the utility infrastructure should be performed to identify and prioritize infrastructure hardening.

• Providing redundant power supplies is a normal task in data center development. Backup supplies may be a separate grid (electric plus gas), batteries, or diesel generators. However, this practice has not become common for water supply. Rarely is a backup supply secured or integrated into the data center infrastructure so that it is immediately available in the event of a primary supply outage.

• Operational flexibility should be improved in order to accommodate variations in source water quality. Systems operating at high CoC and therefore high water efficiency are more vulnerable to water quality variability. Where water supplies can vary in quality or where multiple sources are used, additional pretreatment of make-up water and sidestream treatment of cooling water may be beneficial.

• Many utility planners anticipate that climate change will adversely affect water supplies by causing shortages. Water supplies dependent on runoff and snowmelt are particularly vulnerable. Data centers can improve water supply resiliency through diversification of supplies, water conservation, and water reuse.
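To put the availability target at the start of this section in concrete terms, an availability percentage can be converted into an annual downtime budget. This is a minimal sketch assuming a 365-day year; the function name is illustrative.

```python
# Convert an availability target into allowable downtime per year.
# Assumption: a 365-day year (525,600 minutes).

MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the minutes per year a service may be unavailable."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return (100 - availability_percent) / 100 * MINUTES_PER_YEAR


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999):
        minutes = allowed_downtime_minutes(target)
        print(f"{target}% availability allows {minutes:.2f} minutes of downtime per year")
```

At 99.999 percent the budget is about 5.26 minutes per year, so any water supply interruption that forces a cooling shutdown for longer than that would by itself break the commitment.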

Conclusion

Data center companies, especially hyperscalers, are facing challenges with regard to reducing costs, managing risks, and assessing the impacts of climate change on variable water supplies. Organizations that proactively embrace the importance of early water and wastewater management and assess the impact on cost and risk will be more competitive and better meet their reliability commitments to customers. IWW